Remote Sensing Change Detection Model Based on Dual Temporal Feature Screening


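Wang Liling (王利玲, born 1999), female, from Lanzhou, Gansu, is a master's student. Her research covers UAV-based (remote sensing) monitoring and assessment of anomalous information and applications of AI-based semantic segmentation and change detection. E-mail: 1346149688@qq.com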

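Received date: 2023-07-06

Revised date: 2023-09-21

Online published: 2023-11-02

Supported by

National Key Research and Development Program of China (2022YFB3903604)

Gansu Natural Science Foundation Project (21JR7RA310)

Youth Science Foundation of Lanzhou Jiaotong University (2021029)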


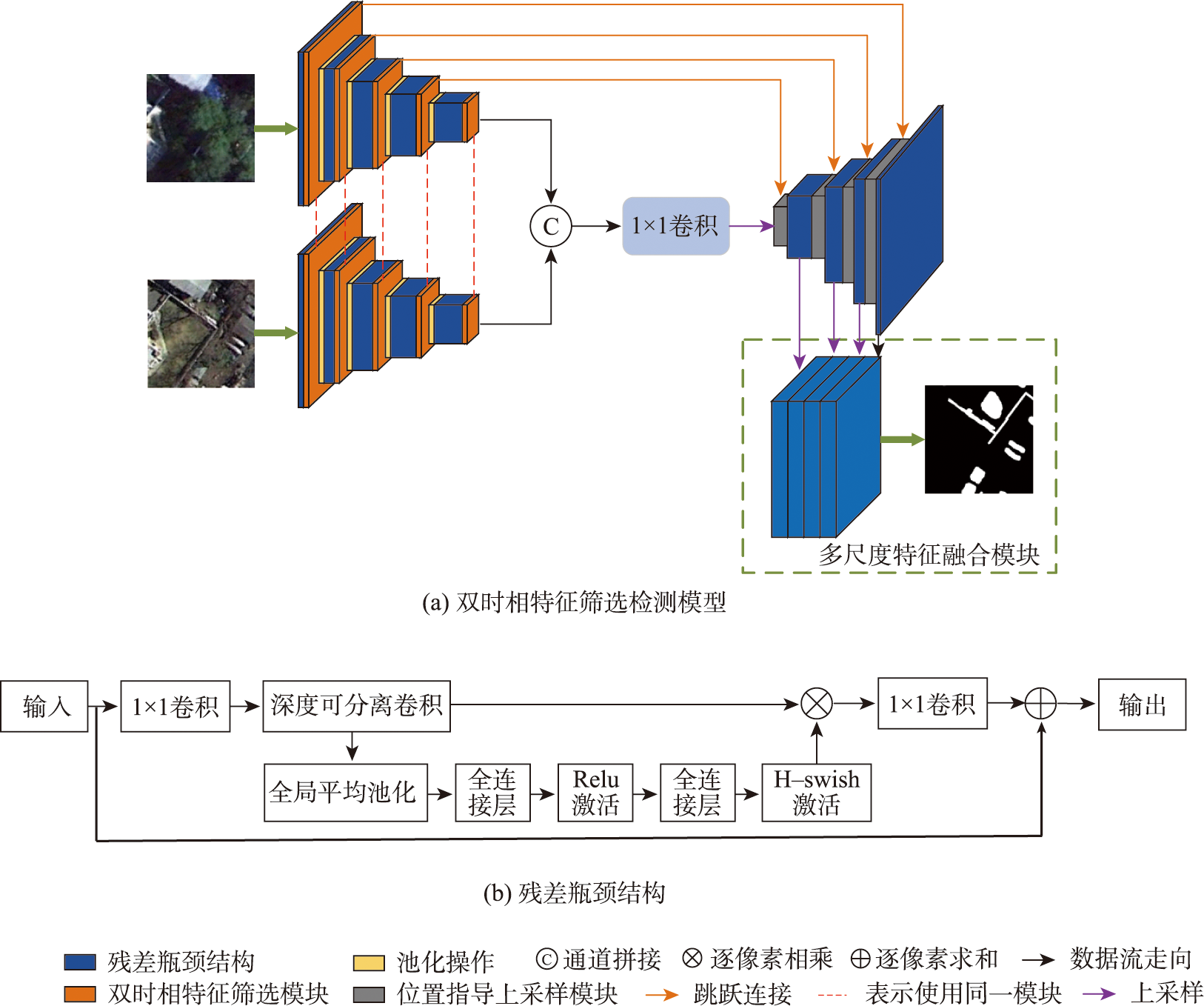

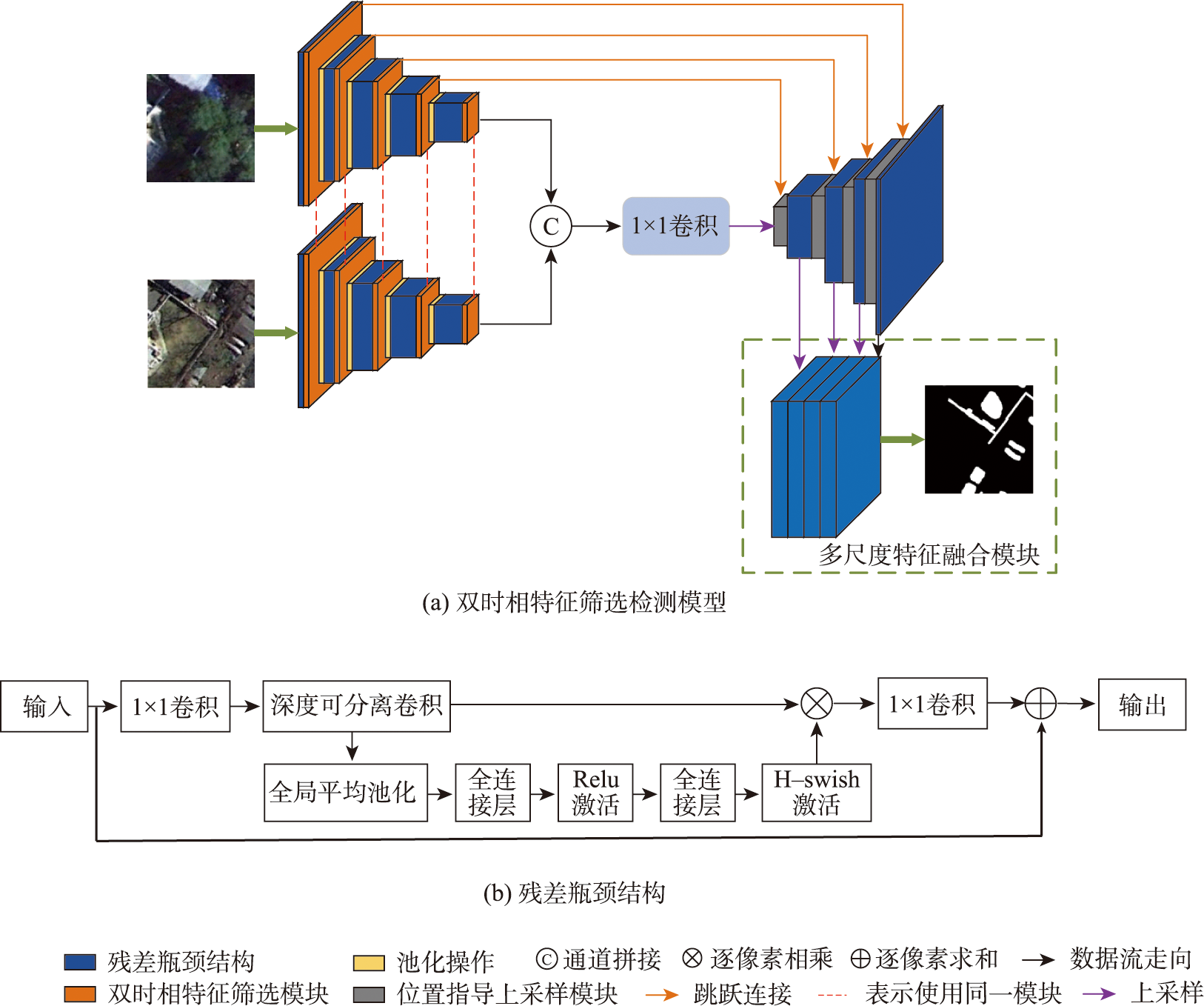

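Real-time monitoring of buildings through remote sensing image change detection is a key technical means for the survey and management work of land, resources, and environment departments. To address the drop in detection accuracy caused by underused dependencies between dual-temporal images and by the loss of spatial detail, this paper proposes a lightweight remote sensing image change detection model based on dual-temporal feature screening. In the encoder, a simplified MobileNetV3 extracts features at different levels from each of the two images; same-level features are fed into a feature screening module, which uses an attention mechanism and threshold screening to establish the relationship between the two images and produce more discriminative features. In the decoder, a position-guided upsampling module is introduced to address the misassignment of boundary pixels caused by ordinary upsampling; it works together with the feature screening module and uses the relationship between the two images to guide upsampling. To address the loss of spatial detail caused by downsampling, a multi-scale feature fusion module aggregates multi-level features to generate a change map with richer spatial detail. Comprehensive experiments on the CDD and DSIFN change detection datasets show that the proposed model reaches F1 scores of 89.42% and 79.43%, respectively, with 5.72 GFLOPs of computation, 1.89 MB of parameters, and a prediction time of 0.02 s. Compared with other models, it offers significant advantages in accuracy and real-time performance and is better suited to deployment on mobile devices; its visualized detection results are also more complete, and its detected change boundaries are smoother.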
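The abstract names attention and threshold screening as the two ingredients of the feature screening module but gives no formula. The sketch below is only an illustration of that idea under stated assumptions: the function name `screen_features`, the sigmoid attention score, and the use of the absolute feature difference are all hypothetical choices, not the authors' design. The threshold argument mirrors the T values (0.4, 0.5, 0.6) listed in the ablation table.

```python
import numpy as np


def screen_features(feat_t1, feat_t2, threshold=0.5):
    """Hypothetical threshold-based screening of two same-level feature maps.

    feat_t1, feat_t2: arrays of shape (C, H, W) from the two acquisition dates.
    Returns the screened difference feature (C, H, W) and a boolean
    keep-mask of shape (H, W).
    """
    if feat_t1.shape != feat_t2.shape:
        raise ValueError("both temporal features must have the same shape")

    diff = np.abs(feat_t1 - feat_t2)

    # Assumed attention score: sigmoid of the channel-mean difference,
    # centred on its spatial mean so that scores spread around 0.5.
    energy = diff.mean(axis=0)
    score = 1.0 / (1.0 + np.exp(-(energy - energy.mean())))

    # Threshold screening: positions scoring below the threshold are zeroed,
    # the remaining positions are re-weighted by their attention score.
    keep = score >= threshold
    screened = diff * score * keep
    return screened, keep


# Toy example: identical inputs except for a 3 x 3 "changed" patch.
rng = np.random.default_rng(0)
t1 = rng.normal(size=(4, 8, 8))
t2 = t1.copy()
t2[:, 2:5, 2:5] += 3.0
screened, keep = screen_features(t1, t2, threshold=0.5)
print(int(keep.sum()), "positions kept out of", keep.size)
```

In this toy case only the modified patch survives screening; a trained module would learn its attention weights rather than derive them from a fixed sigmoid.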

吴小所, 王利玲, 吴朝阳, 郭存鸽, 杨乐, 闫浩文. Remote Sensing Change Detection Model Based on Dual Temporal Feature Screening[J]. Journal of Geo-information Science, 2023, 25(11): 2268-2280. DOI: 10.12082/dqxxkx.2023.230377

Real-time monitoring of buildings using remote sensing image change detection is critical to the survey and management work of land, resources, and environment departments. This study proposes a lightweight remote sensing image change detection model based on dual-temporal feature screening. The model targets two problems in existing change detection methods: the interdependency between dual-temporal images is not fully exploited, and the loss of spatial detail degrades detection accuracy. In the encoder, a simplified MobileNetV3 extracts features at different levels from the dual-temporal images, which reduces network size and latency. To exploit the spatiotemporal dependencies between the two images, a dual-temporal feature screening module (DFSM) is added to the encoder. Features at the same level are fed into the DFSM, which establishes relationships between the dual-temporal images through attention mechanisms and threshold screening, generating more discriminative features and strengthening the model's ability to recognize changes and capture global information. In the decoder, a position-guided upsampling module (PGUM) is introduced to address the incorrect assignment of boundary pixels by ordinary upsampling methods. The PGUM uses the relationship between the dual-temporal images to assign weight coefficients to the feature maps output by the DFSM and fuses them with the upsampled and convolved feature maps, highlighting useful information and suppressing complex background information in remote sensing images.
To address the loss of spatial detail caused by downsampling, a multi-scale feature fusion module aggregates multi-level features in the decoder and generates a change map with more spatial detail. The effectiveness and real-time performance of the proposed model are verified on the CDD and DSIFN datasets and compared with six advanced remote sensing change detection methods: FCN-PP, FDCNN, IFN, MSPSNet, SNUNet-CD, and DESSN. On the CDD dataset, the precision, recall, F1 score, and IoU of the proposed model are 91.28%, 87.63%, 89.42%, and 81.34%, respectively. The parameter size, computational cost, and prediction time are 1.89 MB, 5.72 GFLOPs, and 0.02 s, respectively. Compared with these six models, the proposed model has the lowest computational cost and the shortest prediction time, and its F1 score is second only to that of DESSN, which makes it particularly suitable for deployment on mobile devices. The visualized detection results are also more complete, and the detected change boundaries are smoother. Overall, the proposed model achieves a better balance between accuracy and real-time performance.
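The multi-scale fusion step described above can be pictured as bringing every decoder level to a common resolution before combining them. The following is a minimal sketch under assumptions, not the module from the paper: the helper names `upsample_nearest` and `fuse_multiscale` are hypothetical, nearest-neighbour upsampling stands in for whatever interpolation the authors use, and plain channel concatenation stands in for the learned fusion.

```python
import numpy as np


def upsample_nearest(feat, factor):
    """Nearest-neighbour upsampling of a (C, H, W) array by an integer factor."""
    return feat.repeat(factor, axis=1).repeat(factor, axis=2)


def fuse_multiscale(features):
    """Resize all levels to the finest resolution and concatenate on channels.

    features: list of (C_i, H_i, W_i) arrays, finest level first. Each coarser
    level must be an integer factor smaller than the finest one.
    """
    target_h, target_w = features[0].shape[1:]
    resized = []
    for feat in features:
        factor = target_h // feat.shape[1]
        if feat.shape[1] * factor != target_h or feat.shape[2] * factor != target_w:
            raise ValueError("levels must differ by an integer scale factor")
        resized.append(upsample_nearest(feat, factor))
    return np.concatenate(resized, axis=0)


# Three toy levels at 8 x 8, 4 x 4, and 2 x 2 resolution.
levels = [np.ones((2, 8, 8)), np.full((4, 4, 4), 2.0), np.full((8, 2, 2), 3.0)]
fused = fuse_multiscale(levels)
print(fused.shape)
```

A real decoder would typically follow the concatenation with convolutions that learn how to weight each level; that part is omitted here.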

Tab. 1 Results of ablation experiments on the DSIFN and CDD datasets (%)

| Model | DSIFN Precision | DSIFN Recall | DSIFN F1 | DSIFN IoU | CDD Precision | CDD Recall | CDD F1 | CDD IoU |
|---|---|---|---|---|---|---|---|---|
| Baseline | 71.85 | 77.23 | 74.01 | 62.13 | 80.44 | 87.06 | 83.28 | 73.41 |
| Baseline+DFSM(T=0.4) | 76.31 | 78.39 | 77.79 | 64.76 | 88.72 | 85.54 | 87.08 | 78.72 |
| Baseline+DFSM(T=0.5) | 76.37 | 78.43 | 77.82 | 64.79 | 88.84 | 85.61 | 87.14 | 78.74 |
| Baseline+DFSM(T=0.6) | 76.41 | 78.31 | 77.78 | 64.74 | 88.92 | 85.45 | 87.07 | 78.71 |
| Baseline+PGUM | 75.25 | **83.07** | 77.96 | 65.74 | 86.03 | 87.30 | 86.65 | 77.97 |
| Baseline+DFSM+PGUM | 76.90 | 79.82 | 78.21 | 66.37 | 89.39 | 85.75 | 87.45 | 79.12 |
| DTFSNet (ours) | **79.03** | 79.86 | **79.43** | **67.97** | **91.28** | **87.63** | **89.42** | **81.34** |

Note: Bold values are the best experimental results.
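For readers unfamiliar with the four metrics in Tab. 1, the sketch below computes them from pixel counts using the standard definitions. The function names and the example counts are illustrative assumptions; the source does not say how the authors aggregate metrics across images, so reported rows need not reproduce exactly from the precision and recall columns.

```python
def change_metrics(tp, fp, fn):
    """Standard change-detection metrics from counts of changed pixels.

    tp: changed pixels detected as changed
    fp: unchanged pixels detected as changed
    fn: changed pixels that were missed
    """
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    iou = tp / (tp + fp + fn)
    return precision, recall, f1, iou


def f1_from(precision, recall):
    """F1 as the harmonic mean of precision and recall (same units as inputs)."""
    return 2 * precision * recall / (precision + recall)


# Illustrative counts, not taken from the paper.
precision, recall, f1, iou = change_metrics(tp=80, fp=10, fn=20)
print(f"P={precision:.4f} R={recall:.4f} F1={f1:.4f} IoU={iou:.4f}")

# Consistency check on the DTFSNet row for CDD (precision 91.28, recall 87.63).
print(round(f1_from(91.28, 87.63), 2))
```

The harmonic mean of the DTFSNet precision and recall on CDD rounds to the reported F1 of 89.42.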

Tab. 2 Comparative experiments on the CDD dataset

| Methods | FLOPs/GFLOPs | Params/MB | Time/s | Precision/% | Recall/% | F1/% | IoU/% |
|---|---|---|---|---|---|---|---|
| FCN-PP | 34.65 | 28.13 | 0.19 | 82.64 | 80.60 | 81.61 | 70.10 |
| FDCNN | 32.40 | **1.86** | 0.08 | 87.51 | 83.20 | 85.18 | 76.07 |
| IFN | 112.15 | 43.50 | 0.15 | 87.90 | 83.34 | 87.44 | 79.77 |
| MSPSNet | 14.17 | 2.21 | 0.05 | 90.72 | 85.11 | 88.56 | 80.09 |
| SNUNet-CD | 33.04 | 12.03 | 0.11 | 93.26 | 84.39 | 88.64 | 80.84 |
| DESSN | 36.75 | 19.35 | 0.26 | **95.36** | 86.33 | **90.20** | **83.11** |
| DTFSNet (ours) | **5.72** | 1.89 | **0.02** | 91.28 | **87.63** | 89.42 | 81.34 |

Note: Bold values are the best results among the models on the CDD dataset.
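The efficiency columns of Tab. 2 are easier to compare as ratios relative to DTFSNet. The short script below does that arithmetic with the values copied from the table; the helper name `cost_ratios` is hypothetical, and the throughput figure assumes that the reported 0.02 s is the prediction time for a single image, which the source does not state explicitly.

```python
# (GFLOPs, parameters in MB, prediction time in s), copied from Tab. 2.
COSTS = {
    "FCN-PP": (34.65, 28.13, 0.19),
    "FDCNN": (32.40, 1.86, 0.08),
    "IFN": (112.15, 43.50, 0.15),
    "MSPSNet": (14.17, 2.21, 0.05),
    "SNUNet-CD": (33.04, 12.03, 0.11),
    "DESSN": (36.75, 19.35, 0.26),
    "DTFSNet": (5.72, 1.89, 0.02),
}


def cost_ratios(costs, reference="DTFSNet"):
    """Return each model's cost divided by the reference model's cost."""
    ref = costs[reference]
    return {
        name: tuple(value / ref_value for value, ref_value in zip(values, ref))
        for name, values in costs.items()
        if name != reference
    }


ratios = cost_ratios(COSTS)
for name, (flops, params, seconds) in ratios.items():
    print(f"{name}: {flops:.2f}x FLOPs, {params:.2f}x params, {seconds:.2f}x time")

# Assumes 0.02 s is the time per image.
images_per_second = 1.0 / COSTS["DTFSNet"][2]
print(f"about {images_per_second:.0f} images per second")
```

Every compared model needs at least about 2.5 times the FLOPs and prediction time of DTFSNet, while the parameter ratio for FDCNN is slightly below 1, since its 1.86 MB is marginally smaller than DTFSNet's 1.89 MB.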

Tab. 3 Comparative experiments on the DSIFN dataset

| Methods | Precision/% | Recall/% | F1/% | IoU/% |
|---|---|---|---|---|
| FCN-PP | 56.40 | 67.03 | 61.26 | 45.74 |
| FDCNN | 69.08 | 79.39 | 70.93 | 57.04 |
| IFN | 69.41 | 80.40 | 71.19 | 57.24 |
| MSPSNet | 73.77 | 81.95 | 76.44 | 63.84 |
| SNUNet-CD | 76.50 | 82.82 | 78.94 | 67.05 |
| DESSN | 78.78 | **83.78** | **80.88** | **69.58** |
| DTFSNet (ours) | **79.03** | 79.86 | 79.43 | 67.97 |

Note: Bold values are the best results among the models on the DSIFN dataset.

