Journal of Geo-information Science

A Multi-Strategy Fusion Method for Aerial Image Feature Matching Considering Shadow and Viewing Angle Differences

Received date: 2025-03-04

Revised date: 2025-04-16

Online published: 2025-06-06

Supported by

National Key Laboratory of Intelligent Spatial Information Fund (a8235)

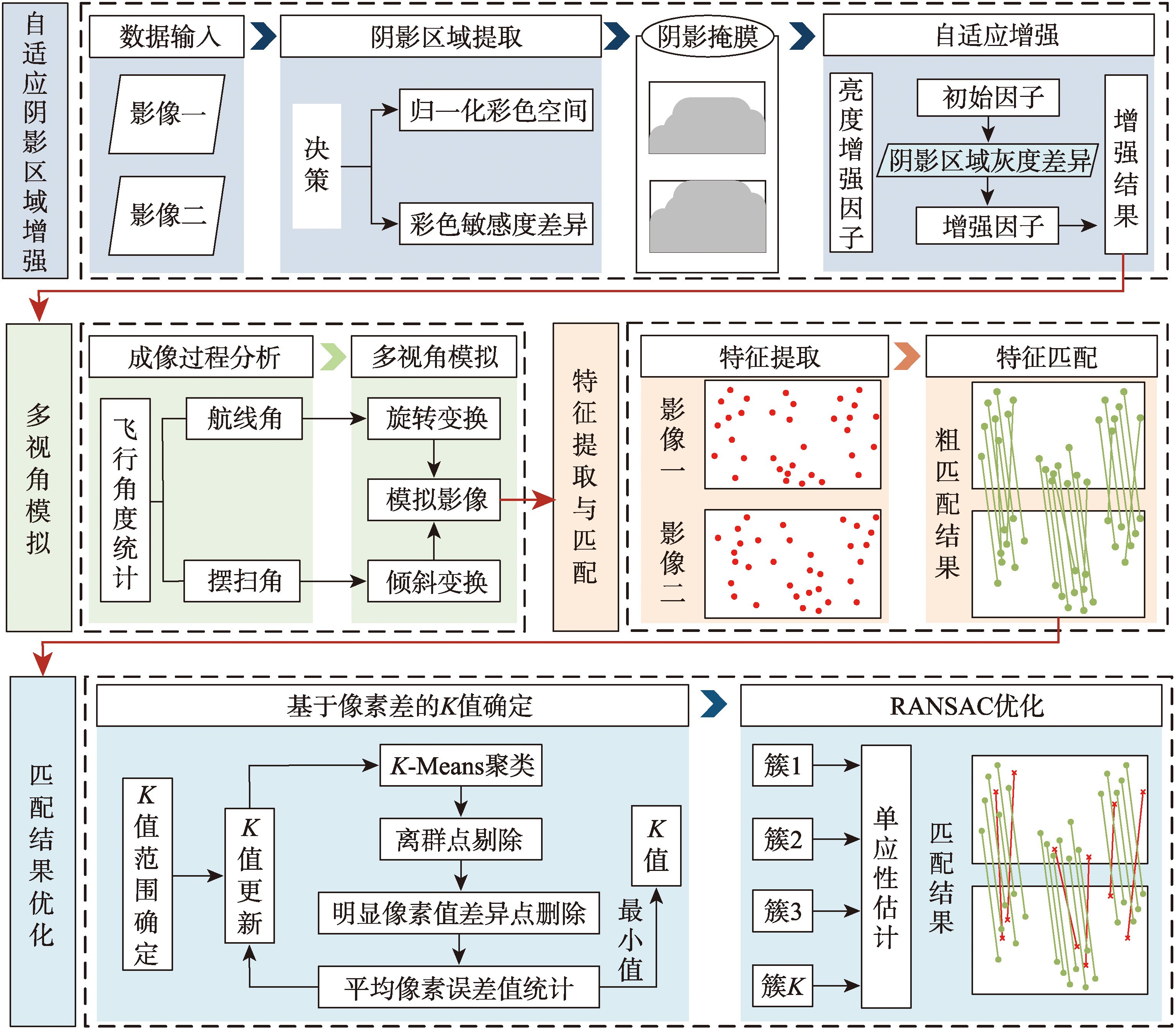

[Objectives] Feature matching is a core step in the 3D reconstruction of aerial images. However, shadows and viewing angle variations during imaging often leave the matching points few in number and unevenly distributed, which significantly degrades accuracy. [Methods] This paper proposes a multi-strategy fusion feature matching method that accounts for shadow and viewing angle differences. It combines the traditional SIFT feature extraction algorithm with the LightGlue feature matching neural network and applies several optimization strategies to achieve high-quality matching results under complex imaging conditions. The main improvements are as follows: (1) An adaptive shadow region enhancement strategy is proposed. Shadow regions are extracted from the original image, and an initial brightness enhancement factor is determined from the ratio of the average brightness of non-shadow and shadow areas. This factor is then adjusted using the gray-level differences within the shadow regions, which brightens them, restores ground object details, and increases the number of feature points. (2) A multi-view simulated image generation strategy is introduced. Simulated images are generated from camera pose information so that the input features adapt better to viewpoint changes, improving matching accuracy and robustness. (3) In the matching optimization stage, aerial images contain significant height differences, so estimation under a planar assumption introduces large errors. To address this, a RANSAC matching optimization algorithm based on K-Means clustering is developed. The number of clusters (K) is determined dynamically from the image's original color information. Matching points are clustered accordingly, and the RANSAC algorithm is applied to each cluster for local optimization. This reduces planar assumption errors and improves the selection of inliers.
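As an illustration of strategy (1), the following is a minimal sketch of a ratio-based shadow brightening step. It is not the authors' implementation: the shadow mask is assumed to be given (the abstract does not say how shadow regions are extracted), and the function name `enhance_shadows`, the parameter `alpha`, and the specific per-pixel adjustment rule are hypothetical choices made only to show how an initial factor could be refined by gray-level differences inside the shadow.

```python
import numpy as np

def enhance_shadows(gray, shadow_mask, alpha=0.5):
    """Brighten shadow pixels of a grayscale image (values in 0..255).

    gray        : 2D array of gray levels
    shadow_mask : 2D boolean array, True where a pixel lies in shadow (assumed given)
    alpha       : hypothetical weight of the gray-level adjustment
    Returns the enhanced image and the initial enhancement factor k0.
    """
    gray = gray.astype(np.float64)
    shadow = gray[shadow_mask]
    lit = gray[~shadow_mask]
    if shadow.size == 0 or lit.size == 0:
        return gray.copy(), 1.0

    # Initial factor: ratio of mean non-shadow brightness to mean shadow brightness.
    mean_shadow = max(shadow.mean(), 1e-6)
    k0 = lit.mean() / mean_shadow

    # Assumed adjustment: pixels darker than the shadow mean get a slightly
    # larger gain, brighter ones a slightly smaller gain.
    deviation = (mean_shadow - shadow) / mean_shadow
    gain = np.clip(k0 * (1.0 + alpha * deviation), 1.0, None)  # never darken

    out = gray.copy()
    out[shadow_mask] = np.clip(shadow * gain, 0.0, 255.0)
    return out, k0
```

In a pipeline like the one described, the enhanced image would then be passed to feature extraction in place of the original; how the paper actually blends or post-processes the enhanced regions is not stated in the abstract.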
[Results] Experiments were conducted using aerial image data captured by the A3 camera, testing both single and combined strategies. Results show that after applying the adaptive shadow region enhancement and multi-view simulation strategies, the number of matching points nearly tripled compared to the unprocessed data. Additionally, after K-Means clustering RANSAC optimization, the average pixel distance error decreased by approximately 30% compared to direct RANSAC optimization, and the matching accuracy improved by an average of 24.8%. [Conclusions] The proposed method effectively addresses the challenges of aerial image matching under complex imaging conditions, providing more robust and reliable data support for downstream tasks such as 3D reconstruction.
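The per-cluster RANSAC idea evaluated above can be sketched as follows. This is a simplified illustration under stated assumptions, not the paper's algorithm: K is passed in directly (the paper derives it from image color information, which is not reproduced here), matches are clustered by their coordinates in the first image, and a local affine model stands in for whatever geometric model the authors estimate. All function names (`kmeans`, `ransac_affine`, `clustered_ransac`) and the threshold values are hypothetical.

```python
import numpy as np

def kmeans(points, k, iters=20):
    """Plain k-means with a deterministic farthest-point initialisation."""
    centers = [points[0]]
    for _ in range(1, k):
        d = np.min([np.linalg.norm(points - c, axis=1) for c in centers], axis=0)
        centers.append(points[d.argmax()])
    centers = np.array(centers, dtype=np.float64)
    for _ in range(iters):
        d = np.linalg.norm(points[:, None, :] - centers[None, :, :], axis=2)
        labels = d.argmin(axis=1)
        for j in range(k):
            if np.any(labels == j):
                centers[j] = points[labels == j].mean(axis=0)
    return labels

def fit_affine(src, dst):
    """Least-squares affine map (3x2 matrix) taking src points to dst points."""
    A = np.hstack([src, np.ones((len(src), 1))])
    M, *_ = np.linalg.lstsq(A, dst, rcond=None)
    return M

def ransac_affine(src, dst, thresh=3.0, iters=200, seed=0):
    """Return a boolean inlier mask for matches src[i] -> dst[i]."""
    n = len(src)
    if n < 4:  # too few matches to verify a model
        return np.zeros(n, dtype=bool)
    rng = np.random.default_rng(seed)
    A = np.hstack([src, np.ones((n, 1))])
    best = np.zeros(n, dtype=bool)
    for _ in range(iters):
        idx = rng.choice(n, size=3, replace=False)
        M = fit_affine(src[idx], dst[idx])
        err = np.linalg.norm(A @ M - dst, axis=1)  # reprojection error in pixels
        inliers = err < thresh
        if inliers.sum() > best.sum():
            best = inliers
    return best

def clustered_ransac(src, dst, k, thresh=3.0):
    """Cluster matches, then run RANSAC independently inside each cluster."""
    src = np.asarray(src, dtype=np.float64)
    dst = np.asarray(dst, dtype=np.float64)
    labels = kmeans(src, k)
    keep = np.zeros(len(src), dtype=bool)
    for j in range(k):
        idx = np.flatnonzero(labels == j)
        keep[idx] = ransac_affine(src[idx], dst[idx], thresh)
    return keep, labels
```

The design point is that each cluster gets its own local model, so matches on surfaces at different heights are not forced to agree with a single global transformation; a single RANSAC pass over all matches would tend to reject one of the surfaces as outliers.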

Key words: feature matching; A3 digital aerial camera; SIFT; LightGlue; aerial image; RANSAC; cluster; K-Means

CHEN Chijie, WANG Tao, ZHANG Yan, YAN Siwei, ZHAO Kangshun. A Multi-Strategy Fusion Method for Aerial Image Feature Matching Considering Shadow and Viewing Angle Differences[J]. Journal of Geo-information Science, 2025, 27(6): 1401-1419. DOI: 10.12082/dqxxkx.2025.250099

Conflicts of Interest: All authors disclose no relevant conflicts of interest.
